What is audio? Moreover, what is sound? These are important questions to ask and answer if you study music.

Music is often defined as ordered sound, sound being perceivable changes in air pressure.

When these changes occur periodically at rates between roughly 20 and 20,000 cycles per second (hertz, Hz), they are perceived as pitch. Slower periodic changes are perceived as rhythm, and changes faster than ~20 kHz are imperceptible.

Audio is an abstraction of sound.

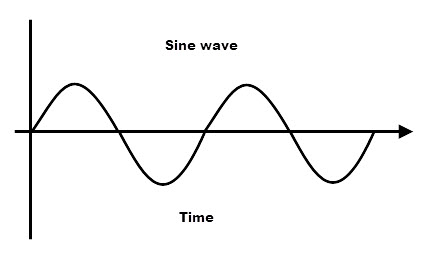

Where sound describes changes in air pressure over time, audio describes the change of an arbitrary value (known as amplitude) over time, particularly changes within the audio band of 20 Hz to 20 kHz.

Audio allows for high-resolution representations of music in mediums other than sound, which allows for its recording and playback.

Electrification in the 20th century allowed for the representation of audio signals as changes of voltage in electric current, drastically changing many aspects of music. This included the invention of electric and electronic instruments, sound amplification, and effect processing.

Since the dawn of the 21st century, digital computers have become the standard tool for composition, recording, processing, and playback. Nearly all music is touched by computers in some way.

Despite this, curriculum in digital audio is underdeveloped in many university and high school music programs.

The goal of this document is to confer a basic understanding of the underlying theory behind digital audio, as well as an understanding of the tools the medium makes available.

Digital Audio Basics

Sampling

The underlying theory of digital audio was developed in tandem with the digital computer itself.

The fundamental hurdle was how to represent continuous data as discrete data, the only kind a digital computer can operate on. The answer was found in sampling.

You may know that the illusion of continuous motion in a film is created by the rapid playback of still images known as frames. Audio sampling operates on the same principle.

A major difference is that, while video frame rates are typically set between 24 and 60 frames per second, audio sample rates are typically set above 40,000 samples per second.

The reason for this high sample rate is explained by the Nyquist-Shannon sampling theorem, which states that, to sample a signal accurately, the sampling rate must be more than twice the signal's highest frequency component.

Because humans can hear up to ~20,000 Hz, only sample rates greater than ~40,000 samples per second are considered sufficient.
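The effect of sampling too slowly can be sketched in a few lines of Python. This is a minimal illustration rather than a production routine: the function names `sample_sine` and `alias_frequency` are hypothetical, and the 44,100 samples-per-second rate is chosen only as a familiar example.

```python
import math

def sample_sine(freq_hz, sample_rate, num_samples):
    """Sample a unit-amplitude sine wave at evenly spaced points in time."""
    return [math.sin(2 * math.pi * freq_hz * n / sample_rate)
            for n in range(num_samples)]

def alias_frequency(freq_hz, sample_rate):
    """Frequency between 0 and sample_rate / 2 that the sampled tone resembles."""
    folded = freq_hz % sample_rate
    return min(folded, sample_rate - folded)

SAMPLE_RATE = 44100  # example rate (assumption); any rate above ~40,000 would do

print(alias_frequency(1000, SAMPLE_RATE))   # below half the sample rate: 1000
print(alias_frequency(30000, SAMPLE_RATE))  # above half the sample rate: 14100
```

A 30 kHz tone sampled at this rate yields the same sample values, inverted in sign, as a 14.1 kHz tone, so the two cannot be told apart once sampled. This is why frequencies above half the sample rate have to be excluded.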

Time vs. Frequency Domain Representation

Digital audio can be conceptualized and operated on in two distinct ways.

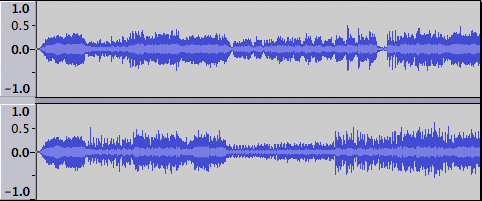

One is the time domain, which deals with samples as a sequence of discrete amplitude values.

Time domain audio data maps well to a two-dimensional graphic representation: for each sample or timestep (typically the x-axis), there is a corresponding amplitude (the y-axis).

Time domain representations don't always track well to human perception of audio, however, because we do not hear individual amplitude values or phase directly. We hear frequency, the rate at which phase changes.

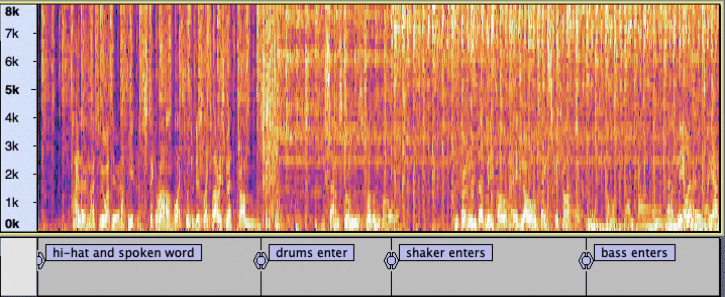

Thankfully, frequency data can be extracted from time domain data using the Fourier transform, one of the most important tools in signal processing.

In the early 19th century, mathematician Joseph Fourier proposed that a signal can be represented as a sum of sinusoids of different frequencies, each in its own proportion. By computing the Fourier transform over short, successive slices of a signal and graphing time on the x-axis, frequency on the y-axis, and the amplitude of each frequency component as color, a frequency domain representation called a spectrogram can be created.
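As a rough sketch of how frequency data is extracted from samples, the following computes a naive discrete Fourier transform of a single slice of audio. The function name `dft_magnitudes`, the 8,000 samples-per-second rate, and the 400-sample slice are assumptions made for the example. Practical tools use the much faster FFT algorithm, and a spectrogram repeats this calculation over many successive slices.

```python
import cmath
import math

def dft_magnitudes(samples):
    """Naive discrete Fourier transform; returns one magnitude per frequency bin."""
    n = len(samples)
    mags = []
    for k in range(n // 2 + 1):  # bins from 0 Hz up to half the sample rate
        total = sum(samples[t] * cmath.exp(-2j * math.pi * k * t / n)
                    for t in range(n))
        mags.append(abs(total))
    return mags

SAMPLE_RATE = 8000  # example values (assumptions)
N = 400             # slice length; bins are SAMPLE_RATE / N = 20 Hz apart

# Time domain input: a 440 Hz sine wave.
tone = [math.sin(2 * math.pi * 440 * t / SAMPLE_RATE) for t in range(N)]

mags = dft_magnitudes(tone)
peak_bin = max(range(len(mags)), key=lambda k: mags[k])
peak_hz = peak_bin * SAMPLE_RATE / N
print(peak_hz)  # 440.0
```

The time domain list `tone` gives no direct indication of pitch, while the frequency domain list `mags` has a single dominant peak at the tone's frequency.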

Basic Operations

When working with digital audio data, the two most fundamental operations are addition and multiplication.

Adding two signals is equivalent to mixing in the analog domain. To create a waveform that represents a song, the individual instrument tracks are added together.
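Mixing by addition can be sketched as follows. The helper name `mix` and the sample values are made up for illustration, and the tracks are assumed to have equal length and a shared sample rate.

```python
def mix(*tracks):
    """Mix equal-length tracks by summing their samples position by position."""
    return [sum(samples) for samples in zip(*tracks)]

bass = [0.5, 0.25, -0.25, -0.5]
lead = [0.25, -0.25, 0.25, -0.25]

print(mix(bass, lead))  # [0.75, 0.0, 0.0, -0.75]
```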

Addition is also the basis of digital oscillators, an essential building block of audio synthesis. A digital oscillator adds to a phase value at each timestep, and resets it when a specified maximum value is reached.

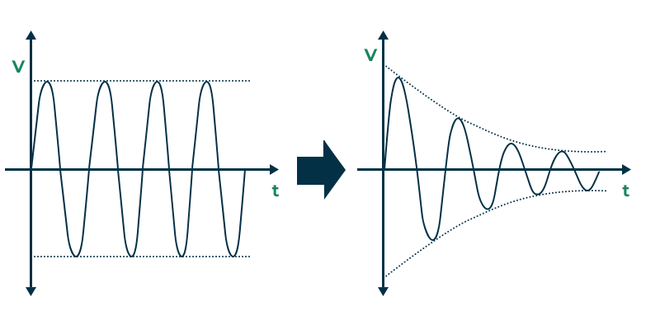

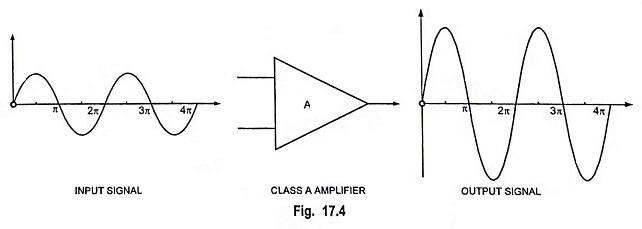
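The oscillator described above can be sketched as a phase accumulator. This is one simple way to write it, not the only one: the name `sine_oscillator`, the 0-to-1 phase range, and the choice of a sine output are assumptions made for the example.

```python
import math

def sine_oscillator(freq_hz, sample_rate, num_samples):
    """Phase-accumulator oscillator: phase climbs from 0 toward 1, then wraps."""
    phase = 0.0
    increment = freq_hz / sample_rate  # fraction of one cycle per sample
    out = []
    for _ in range(num_samples):
        out.append(math.sin(2 * math.pi * phase))
        phase += increment             # the addition at each timestep
        if phase >= 1.0:               # the reset: wrap back into the 0..1 range
            phase -= 1.0
    return out

# One second of a 440 Hz tone at an example rate of 44,100 samples per second.
samples = sine_oscillator(freq_hz=440, sample_rate=44100, num_samples=44100)
```

Reading the phase value itself instead of its sine would give a sawtooth wave, which is why the same accumulator underlies many waveform shapes.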

Multiplication scales the volume of samples. Multiplying every sample of an audio signal by a positive number less than 1 results in attenuation, essentially the reduction of a signal's volume. Multiplying a signal's amplitude values by a number greater than 1 represents amplification, an increase in volume.
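A minimal sketch of attenuation and amplification by multiplication follows. The names `apply_gain` and `db_to_gain` are hypothetical. The decibel conversion is a common convention that this document does not otherwise cover, and is included only to show where typical gain factors come from.

```python
def apply_gain(samples, gain):
    """Scale every sample by the same factor."""
    return [s * gain for s in samples]

def db_to_gain(db):
    """Convert a level change in decibels to a linear multiplier."""
    return 10 ** (db / 20)

signal = [0.5, -0.5, 0.25]

print(apply_gain(signal, 0.5))  # attenuation: [0.25, -0.25, 0.125]
print(apply_gain(signal, 2.0))  # amplification: [1.0, -1.0, 0.5]
```

For example, `db_to_gain(-6)` is roughly 0.5, which is why a 6 dB cut is often described as halving a signal's amplitude.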

As shown in the effects section, more complicated transformations are made by chaining together many simple addition and multiplication operations.

Synthesis

Much early work in digital audio revolved not around the processing of recorded musical signals, but instead the synthesis of sounds from scratch. Digital synthesis remains an active field of research, as the possibilities it affords are nearly limitless.

Working from the principles of Fourier analysis, additive synthesis creates timbres through the addition of sine waves, and was one of the earliest techniques used. Additive synthesis requires an oscillator for every frequency component, however, making it a computationally expensive technique that poses problems when computing resources are limited.
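Additive synthesis can be sketched as a sum of sine waves at whole-number multiples of a fundamental frequency. The function name `additive` and the amplitude list are illustrative assumptions. Note that the inner sum performs one sine calculation per frequency component per sample, which is the cost described above.

```python
import math

def additive(fundamental_hz, harmonic_amps, sample_rate, num_samples):
    """Sum one sine per harmonic; harmonic_amps[i] scales harmonic number i + 1."""
    out = []
    for n in range(num_samples):
        t = n / sample_rate
        out.append(sum(amp * math.sin(2 * math.pi * fundamental_hz * (i + 1) * t)
                       for i, amp in enumerate(harmonic_amps)))
    return out

# Odd harmonics at amplitude 1/k give a rough approximation of a square wave.
square_ish = additive(220, [1, 0, 1 / 3, 0, 1 / 5, 0, 1 / 7], 44100, 441)
```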

Frequency modulation synthesis was developed as a computationally inexpensive alternative to additive synthesis. It allows for the creation of many frequencies from as few as two oscillators, but is less intuitive to parameterize than additive synthesis.
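A two-oscillator FM voice can be sketched as below, using the common formulation in which the modulator is added to the carrier's phase. The name `fm_tone` and the parameter values are assumptions for the example. The `index` parameter controls how strongly the modulator affects the carrier, and so how many extra frequencies appear.

```python
import math

def fm_tone(carrier_hz, modulator_hz, index, sample_rate, num_samples):
    """Two-oscillator FM: the modulator's output offsets the carrier's phase."""
    out = []
    for n in range(num_samples):
        t = n / sample_rate
        modulator = math.sin(2 * math.pi * modulator_hz * t)
        out.append(math.sin(2 * math.pi * carrier_hz * t + index * modulator))
    return out

plain = fm_tone(440, 110, index=0.0, sample_rate=44100, num_samples=1000)
rich = fm_tone(440, 110, index=5.0, sample_rate=44100, num_samples=1000)
```

With an index of 0 the result is a plain sine wave at the carrier frequency. Raising the index produces a more complex timbre at the same per-sample cost of two sine calculations.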

Less prevalent but equally interesting techniques include physical modeling, subtractive, and granular synthesis.

Signal Processing

As mentioned earlier, digital audio allows for the manipulation of existing musical signals. Operations range from basic attenuation to radical alterations such as pitch correction.

When digital signal processing calculations can occur faster than human perception of discrete events in time, perceptually real-time processing is possible.

Modern computers are often capable of performing billions of operations per second, making real-time digital audio processing increasingly popular among practicing musicians.

A Survey of Digital Audio Tools

Effects

Equalization (EQ) refers to the boosting or attenuation of certain frequencies in an audio signal, and is one of the most ubiquitous audio effects. Modern EQ is performed with digital filters, which combine a signal's current and past sample values and are controlled through user-friendly parameters.

Initially, filters were used to compensate for the infidelity of early recording techniques, but have since become powerful tools for both sweetening natural timbres and sculpting wholly new electronic sounds.
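A very small digital filter can be sketched as follows. This is a one-pole low-pass filter, chosen here only because it is short. It is not a full equalizer, and the name `one_pole_lowpass` and the cutoff values are assumptions for the example. Each output sample moves a fraction of the way from the previous output toward the current input, which smooths out fast (high-frequency) changes.

```python
import math

def one_pole_lowpass(samples, cutoff_hz, sample_rate):
    """Attenuate frequencies above cutoff_hz using one value of memory."""
    a = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    y = 0.0  # previous output: the filter's memory of the signal's past
    out = []
    for x in samples:
        y += a * (x - y)  # move part of the way toward the new input
        out.append(y)
    return out

# A constant (0 Hz) input passes through; a rapidly alternating one is reduced.
steady = one_pole_lowpass([1.0] * 2000, cutoff_hz=1000, sample_rate=44100)
fast = one_pole_lowpass([1.0 if n % 2 == 0 else -1.0 for n in range(2000)],
                        cutoff_hz=1000, sample_rate=44100)
```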

Dynamic range compression is another widely used effect. By lowering the volume of loud sections on a track, the entire track's volume can be raised without fear of clipping. This allows for increased perceptual loudness, and creates consistency in a clip's volume.

While compression is an essential tool in popular music, its overuse has created controversy in the music industry, culminating in the loudness war of the early 2000s.

It is generally recommended to use compression subtly to sweeten sounds, rather than as an effect to transform their character.
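The core idea of compression can be sketched sample by sample. This is a deliberately simplified model: the name `compress` and its parameters are hypothetical, it works on raw amplitudes rather than decibel levels, and it has no attack or release smoothing, all of which practical compressors handle with more care.

```python
def compress(samples, threshold=0.5, ratio=4.0, makeup_gain=1.0):
    """Reduce the part of each sample above the threshold, then apply makeup gain."""
    out = []
    for s in samples:
        level = abs(s)
        if level > threshold:
            # Only the portion above the threshold is scaled down.
            level = threshold + (level - threshold) / ratio
        out.append(makeup_gain * (level if s >= 0 else -level))
    return out

loud_and_quiet = [0.25, -0.25, 1.0, -1.0]

print(compress(loud_and_quiet))  # [0.25, -0.25, 0.625, -0.625]
```

Because the peaks now reach only 0.625, a `makeup_gain` of up to 1.6 can be applied without exceeding the original peak level, which raises the quiet samples along with everything else.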

Time effects, like digital filters, leverage memory of a signal's past values to create output samples. Digital audio is well suited to this, because it is capable of nearly perfect recording, storage, and playback of audio samples. Among the first commercial digital audio devices were in fact time effects, such as those made by Eventide Inc. in the 1970s.

Reverb is a ubiquitous time effect that mimics the physical phenomenon of acoustic reflection, where audio in a room is not only heard coming from its source but also reflected from many surfaces.

Another popular time effect is delay, which replays a signal's recent past after a gap long enough to be perceived as a discrete repeat.
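A delay can be sketched with a circular buffer that stores recent samples. The name `feedback_delay` and its parameters are assumptions for the example. The `feedback` value controls how much of each repeat is fed back in to produce further, quieter repeats.

```python
def feedback_delay(samples, delay_samples, feedback=0.5, mix=0.5):
    """Add repeats of the signal, each delay_samples later than the previous one."""
    buffer = [0.0] * delay_samples  # circular buffer holding the signal's past
    write_pos = 0
    out = []
    for x in samples:
        delayed = buffer[write_pos]  # value written delay_samples steps ago
        buffer[write_pos] = x + feedback * delayed
        write_pos = (write_pos + 1) % delay_samples
        out.append(x + mix * delayed)
    return out

impulse = [1.0] + [0.0] * 9

print(feedback_delay(impulse, delay_samples=3))
# [1.0, 0.0, 0.0, 0.5, 0.0, 0.0, 0.25, 0.0, 0.0, 0.125]
```

A three-sample delay is far too short to hear as an echo and is used here only to keep the output readable. At a rate of 44,100 samples per second, a half-second delay would use `delay_samples=22050`.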

Modulation effects involve the manipulation of an audio signal by a periodic function, typically an elemental waveform produced by a low-frequency oscillator (LFO). When one signal modulates another, the signal being modified is called the carrier and the signal that modifies it is called the modulator.

The simplest modulation effect is tremolo, which modulates a signal's volume. More complex modulation effects include chorus, detune and phaser.
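Tremolo can be sketched by multiplying each sample by a slowly varying gain. In this sketch the input signal is the carrier and a sine-wave LFO is the modulator. The name `tremolo` and the `depth` convention (0 for no effect, 1 for full) are assumptions made for the example.

```python
import math

def tremolo(samples, rate_hz, depth, sample_rate):
    """Modulate volume with a sine LFO; gain sweeps between 1 and 1 - depth."""
    out = []
    for n, x in enumerate(samples):
        lfo = math.sin(2 * math.pi * rate_hz * n / sample_rate)  # range -1..1
        gain = 1.0 - depth * 0.5 * (1.0 + lfo)
        out.append(x * gain)
    return out

# A constant input makes the gain curve itself visible in the output.
wobble = tremolo([1.0] * 1000, rate_hz=5, depth=0.5, sample_rate=1000)
```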

Spectral processing involves the manipulation of audio data in the frequency domain. Common use cases are convolution and pitch correction, effects that are difficult to execute in the time domain.

Composition and Production

In the 21st century, streaming has become the dominant way recorded music is consumed, accounting for the majority of revenue in the US music industry since 2016. Streaming necessitates the distribution and playback of music in digital formats, which is conducive to its production in the digital domain as well.

Digital music production is typically accomplished in the context of a Digital Audio Workstation (DAW), a tool that encapsulates the functions of a recording studio in highly portable software.

DAWs allow for almost any digital signal processing imaginable, because they generally support plugins, external software designed to run inside a larger host application.

Another tool is music notation software, which digitizes composition in staff notation. These programs allow for real-time playback of music as it is being written, and generally accept input in the form of keyboard, mouse, and MIDI data.

Analysis Tools

Many digital audio tools involve visualization of some kind. Usually these are practical tools for measuring aspects of an audio signal like volume, frequency content or spatial image, but sometimes audio visualization can be an artistic representation used to augment performance or playback.

Applications for designing artistic visualizations include TouchDesigner, Jitter, and MilkDrop. Their increasing prevalence in EDM performance has resulted in the growth of a community of 'VJs' or 'Visual Jockeys.'

Music information retrieval is a subfield of computer audition that studies how audio data maps to music cognition. It informs the generation of music using artificial intelligence, and allows for categorization of digital audio by markers such as tempo, key, mood, and genre.

By mapping the conventions of a music system such as tonal harmony to audio data, music conforming to that system can be generated algorithmically.

Conclusion

While digital audio is a field of extensive breadth and depth, I hope this brief document can provide a comprehensive enough introduction to have readers asking the right questions.

If you are interested in learning more about digital audio, the following resources are recommended as next steps:

The Theory and Technique of Electronic Music - Miller Puckette